Governance and accountability

Clear cross-business lines of oversight and accountability, including across artificial intelligence use or development, enable effective risk management.


Assembling a team

A variety of roles and expertise is useful to support responsible AI. Depending on the size of the organisation, there may not be the human resources to have individuals dedicated to AI-specific roles. AI governance responsibilities may only be a portion of an individual’s role. However, if a team can be assembled, it can be useful to bring together a range of diverse perspectives and expertise.

Leadership around responsible use and/or development of AI systems could include staff with responsibilities or expertise across, for example:

- any strategic leadership, ethics, or AI-specific functions your organisation may have available – for example, you could engage a senior lead to drive responsible AI efforts

- Security specialist – to provide cybersecurity and other security expertise such as vulnerability management, security assurance and governance, threat detection, data and infrastructure protection and more (see IT and cybersecurity)

- Data and/or AI Governance – to provide advice on appropriate measures to understand and assess data quality, security, algorithms, access permissions, and proper usage of AI systems procured or developed (see Data and modelling)

- Technology/Data Science – to provide data, IT, and technology expertise and deliver on responsible AI processes through operations and/or system development

- Legal and compliance – to provide an updated understanding of relevant laws and regulations and how they may apply, to help secure any required legal permissions, as well as help navigate any contractual arrangements with third party data or system suppliers (see Compliance and legal obligations)

- Privacy – to give guidance on building trust and managing risks related to information privacy laws

- HR/Training – to take responsibility for supporting internal AI education and upskilling, as well as diversity of workforce (see Skills and knowledge building)

- Communications – to understand and help deliver on the best way to discuss the AI approach with customers and other identified stakeholders, providing comfort that the best possible service is being provided and any concerns or risks are being controlled adequately (including through triaging AI related feedback, queries etc.) (see Stakeholder interactions)

These individuals should be able to adequately steer responsible AI use and development in the context of the project/s being considered and on an ongoing basis as needed. This includes encouraging alignment across teams, for example towards:

- strategic information sharing

- shared decision-making

- overarching oversight mechanisms

- development of AI policies and supporting resources (helping to mitigate potential risk explored in AI system specific considerations).

Robust oversight and governance that promotes transparency and explainability of AI use or development enables business users to demonstrate responsible use, and developers to demonstrate that AI systems have been developed in a trustworthy way.

They also assist your ability to collaborate with staff, stakeholders, or third parties to assess, explain or demonstrate, and/or externally review responsible use of AI.

Depending on project scale and resourcing, it could also be appropriate to include impacted or external stakeholders as part of broader engagement efforts, or to ensure diversity in decision-making (for example, through Māori representation). (See Engagement and consultation).

Policies, contracts, or process documentation may need to be updated to match how you are using or developing AI systems.

It is helpful to clearly document who is in charge of what, so everybody knows their role.

Compliance and legal obligations

Businesses should be aware of their legal obligations in relation to any of their operations, including sector-specific regulations.

It is valuable to identify, catalogue and understand all legal obligations that are relevant for use or development (as appropriate) of the AI system. This can include, but is not limited to elements of the following:

| Legislation | Example of potential relevance for AI |
| --- | --- |
| Commerce Act 1986 | Ensuring AI systems do not engage in practices that restrict competition (e.g. algorithmic pricing collusion). |
| Companies Act 1993 | Upholding directors’ duties, including due care and diligence, and legal and ethical obligations. |
| Consumer Guarantees Act 1993 | Ensuring businesses can uphold obligations to retail customers of goods or services. |
| Contract and Commercial Law Act 2017 | Ensuring use of AI does not impede the governance of contracts and transactions. |
| Fair Trading Act 1986 | Avoiding misleading or deceptive conduct related to outputs or use of AI tools. |
| Human Rights Act 1993 and Bill of Rights Act 1990 | Upholding requirements to avoid discrimination on the basis of sex, race, and other protected grounds in protected areas, and protecting civil and political rights. |
| The Privacy Act 2020, and any applicable code of practice | Ensuring responsibilities are upheld for handling personal information (which may be included as part of AI inputs or outputs). Any business interacting with personal information is required to have a privacy officer. Privacy officers are responsible for ensuring compliance with the Privacy Act, dealing with requests for access or correction to personal information, and working with the Privacy Commissioner during complaints investigation. Information for Privacy officers(external link) — Privacy Commissioner |
| Intellectual Property Law including: Designs Act 1953, Copyright Act 1994 | Understanding ownership, protection and licensing of AI outputs, datasets and underlying algorithms. |
| Media Law including: Harmful Digital Communications Act 2015, Films, Videos, and Publications Classification Act 1993, Broadcasting Act 1989 | Being aware of the potential for use of tools to generate or share abusive and/or unlawful electronic communications and, if relevant, being prepared to handle takedown obligations. |

Other laws may be relevant depending on the intended and potential uses of the AI system. This could include sector specific laws, regulations and standards.

In New Zealand, other resources and guidelines are available to help understand and comply with various obligations, for example the Privacy Commissioner’s guidance on how the Information Privacy Principles apply to AI.

Artificial Intelligence and Privacy: What you need to know [PDF 122 KB](external link) — Privacy Commissioner

If your organisation is operating multinationally or globally, it is important to keep up-to-date on local and international developments. These may be different to New Zealand requirements.

Various forms of AI-specific regulation and governance are emerging internationally (complementing more general applicable regulations, such as the EU’s General Data Protection Regulation). For instance, the EU AI Act was the first AI-specific regulation to be enacted. Countries like Australia, Singapore, the UK, and the US have developed resources to support lawful AI practices.

Institutions such as the OECD and World Economic Forum are also influencing global expectations and standards. Some businesses are aligning themselves with international technical standards, such as ISO/IEC 42001:2023 Artificial Intelligence Management System.

ISO/IEC 42001:2023(external link) — Standards New Zealand

Professional legal advice should always be sought if needed.

Scenario A: Appliance Alliance

Three mid-sized companies dominate New Zealand’s smart thermostat market (the Appliance Alliance). Each of them separately decides to adopt a third-party AI-based pricing tool (AI Price Software), to stay competitive and optimise revenue.

AI Price Software was marketed as a dynamic pricing solution. It analysed non-public pricing data from each of the three companies and used a shared algorithm to recommend optimal prices for each smart thermostat model. The goal was to maximise margins by responding to market trends in real time.

Initially, the tool appeared to work well. Prices stabilised, and each company reported improved profitability. However, six months in, market analysts noticed something unusual: prices across all three brands were rising in unison and remained high, even during periods of low demand. Competition in the market seemed to vanish, which led to higher prices, poorer quality, and poorer service for consumers and suppliers across the entire market.

A closer look revealed that AI Price Software had effectively facilitated algorithmic collusion. By pooling sensitive pricing data and recommending uniform prices, the software reduced competitive pressure and enabled coordinated pricing – without any direct communication between the companies.

This raised serious legal concerns. The Commerce Commission launched an inquiry, and the companies were found to be at risk of breaching section 30 of the Commerce Act 1986, which prohibits cartel conduct. This type of breach is a criminal offence attracting substantial financial penalties and potential imprisonment.

In response, the companies took decisive action, including:

- immediately ceasing use of AI Price Software

- being accountable to customers about the risk that presented itself and how it is being managed

- legal and technical staff developed procurement guidelines for their respective businesses that help

- training staff to recognise and report irregularities and/or non-compliance in AI systems and their use, including on Commerce Act obligations.

Risk management

AI use and integration can expose vulnerabilities and exacerbate existing risks. That’s why it’s important to have strong ways to spot and fix risks early. Good risk management helps businesses stay safe and make the most of what AI can offer.

Whether using or developing AI systems, businesses should take a balanced approach to risk management. This means taking account of how AI is being used, what could go wrong, and the context of their AI use and its impact, as well as business risk appetite and resourcing. Conducting a stakeholder impact assessment can also help identify who is impacted and possible mitigations.

This guidance can support businesses to identify and understand what risks might be relevant for their context. These could span ethical, legal, operational, reputational and technical risks. Some examples of common AI related risks businesses may face include:

- compromise of personal or otherwise sensitive or confidential information through security vulnerabilities and attacks

- unfair treatment of those impacted by AI-informed actions or decisions due to biased AI systems and/or human overreliance on those AI systems

- lack of transparency with users or customers as to AI use

- * misinformed action or decision-making based on GenAI ‘hallucinations’.
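One way to make these considerations concrete is a simple risk register. The sketch below is illustrative only: the 1 to 5 likelihood and impact scales, the multiplicative score, the `risk_appetite` threshold and all field names are assumptions rather than part of this guidance, and the ratings are placeholder values based on the example risks listed above.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    category: str      # e.g. ethical, legal, operational, reputational, technical
    likelihood: int    # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int        # assumed scale: 1 (minor) to 5 (severe)
    mitigation: str = ""

    @property
    def score(self) -> int:
        # Assumed scoring rule: likelihood multiplied by impact.
        return self.likelihood * self.impact


def risks_above_appetite(register: list[Risk], risk_appetite: int) -> list[Risk]:
    """Return risks scoring above the appetite threshold, highest score first."""
    flagged = [r for r in register if r.score > risk_appetite]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


# Placeholder entries; the ratings are invented for illustration.
register = [
    Risk("Compromise of personal information through a security attack",
         "technical", 3, 5, "Security review before deployment"),
    Risk("Unfair treatment due to biased AI outputs",
         "ethical", 2, 4, "Human review of AI-informed decisions"),
    Risk("Customers not told that AI is in use",
         "reputational", 2, 2, "Publish an AI use notice"),
    Risk("Decisions based on GenAI hallucinations",
         "operational", 4, 3, "Verify outputs against source material"),
]

for risk in risks_above_appetite(register, risk_appetite=6):
    print(f"{risk.score:>2}  [{risk.category}] {risk.description}")
```

A register like this is only a starting point. The scales and threshold should reflect your own business context, risk appetite and resourcing.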

The Massachusetts Institute of Technology AI Risk Repository is a comprehensive open resource that identifies and categorises AI risk for public use. It reflects that a number of operational, business, compliance and reputational risks can be exacerbated by AI.

What are the risks from Artificial Intelligence?(external link) — MIT AI Risk Repository

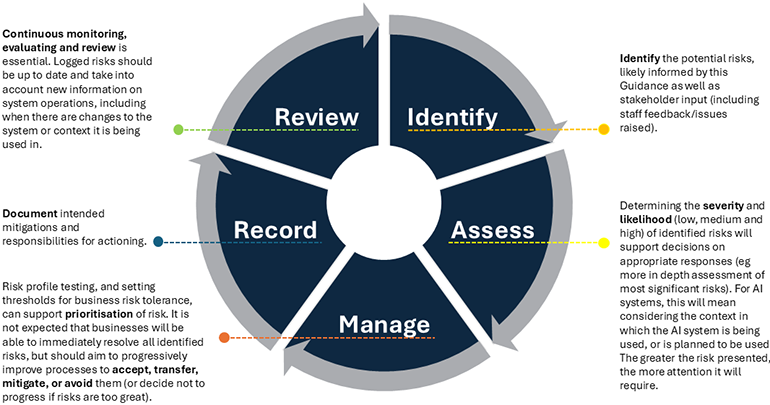

Risk management processes generally will move through the following steps throughout the AI life cycle to support integration or development of AI, and are most effective if started early and performed regularly.

Artificial intelligence life cycle

Text description of infographic: AI life cycle

Guidelines are available to support implementation of risk management processes more generally in your organisation, which can be built on for AI contexts, such as:

- the risk management plan worksheet from Business.govt.nz, as part of guidelines for laying the groundwork for good governance

Risk management plan worksheet [DOCX 1.5 MB](external link) — Business.govt.nz

- the Stats NZ Algorithm Impact Assessment toolkit (designed for government agencies but also helpful for business)

Algorithm Impact Assessment toolkit(external link) — data.govt.nz

- international standards and frameworks, including ISO/IEC 42001:2023 Information technology – Artificial Intelligence – Management system and other country frameworks listed at Appendix 3.

ISO/IEC 42001:2023(external link) — ISO

- other sector-specific risk management strategies and frameworks that may be available (some are listed in Other Guidance and resources).

Additional tips for AI risk management

Recordkeeping

As with most business development, harnessing AI successfully requires strategic thinking, planning and, importantly, documentation of business actions and processes.

Recordkeeping and documentation support accountability, helping you to understand where and how decisions have been made. AI-specific documentation such as AI model cards is useful for recording a model’s purpose, data sources, training methodologies, performance metrics and potential biases in detail. Model cards help builders and developers to maintain and communicate about a model, and help users and deployers to understand it.
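A model card can be kept as structured data so it is easy to store, share and review. The following is a minimal sketch, not a standard format: the `ModelCard` class, its field names, the example model and the metric value are all hypothetical, chosen to mirror the items listed above.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ModelCard:
    model_name: str
    purpose: str
    data_sources: list[str] = field(default_factory=list)
    training_methodology: str = ""
    performance_metrics: dict[str, float] = field(default_factory=dict)
    potential_biases: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """List the fields that are still empty, to prompt follow-up."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Serialise the card so it can be stored with other project records."""
        return json.dumps(asdict(self), indent=2)


card = ModelCard(
    model_name="customer-query-classifier",    # hypothetical model
    purpose="Route incoming customer emails to the right team",
    data_sources=["Historical support emails (de-identified)"],
    performance_metrics={"accuracy": 0.91},     # placeholder value
)

print(card.to_json())
print("Still to document:", card.missing_fields())
```

Checking for empty fields is one simple way to notice when a card has been started but not finished, for example when potential biases have not yet been assessed.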

For larger businesses, several technologies and digital solutions may already be logged in a central place. Businesses may need to be able to refer to where AI is being used and how, to help with answering customer queries, for example, or complying with any required audits.
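A central log of AI use can be as simple as a list of records that staff can filter when a query or audit comes in. The sketch below assumes a hypothetical `AISystemRecord` structure and a 365-day review interval. Neither comes from this guidance, and the example systems are invented.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AISystemRecord:
    name: str
    business_function: str
    how_ai_is_used: str
    owner: str
    last_reviewed: date


def systems_in_function(inventory: list[AISystemRecord],
                        business_function: str) -> list[AISystemRecord]:
    """Find the AI systems used by one part of the business."""
    return [s for s in inventory if s.business_function == business_function]


def overdue_for_review(inventory: list[AISystemRecord], today: date,
                       max_age_days: int = 365) -> list[AISystemRecord]:
    """Find records last reviewed more than max_age_days ago (assumed interval)."""
    return [s for s in inventory if (today - s.last_reviewed).days > max_age_days]


# Invented example records.
inventory = [
    AISystemRecord("Support chatbot", "Customer service",
                   "Drafts replies to customer queries",
                   "Head of Customer Service", date(2024, 1, 15)),
    AISystemRecord("Invoice scanner", "Finance",
                   "Extracts fields from supplier invoices",
                   "Finance Manager", date(2025, 3, 1)),
]

for system in overdue_for_review(inventory, today=date(2025, 6, 1)):
    print(f"Review overdue: {system.name} (owner: {system.owner})")
```

Recording an owner against each system also supports the earlier point about documenting who is in charge of what.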

It’s valuable to follow good documentation practices throughout the AI lifecycle.

* Indicates content specific to GenAI